Bias in Military (or Conservation) Funded Ocean Noise Research

(This item first appeared in AEI’s lay summaries of new research.)

Wade, Whitehead, Weilgart. Conflict of interest in research on anthropogenic noise and marine mammals: Does funding bias conclusions? Marine Policy 34 (2010) 320-327.

In the United States, the US Navy funds about 70% of the research into the effects of ocean noise on wildlife (and half worldwide). For many years, conservation groups have questioned whether this preponderance of funding is skewing research results, either by constraining the types of questions being studied or by leading researchers to downplay negative impacts of noise in order to keep receiving funding. The authors of this new study report a significant correlation between Navy funding and results reporting “no effect” of noise, based on their analysis of several wide-ranging reviews of ocean noise science and of the primary research papers cited in those reviews. While the data behind their conclusion are clearly explained, the results don’t look nearly as clear-cut to me: I question the comparability of the five reviews used, and while the trend in the primary papers is more obvious, it’s hard to ignore the fact that the majority of military-funded papers still found that noise had effects. Indeed, as the authors make clear, it’s the conservation-funded reviews and primary research that are most clearly one-sided in their results (though there are good reasons for this, also fleshed out by the authors and in AEI’s commentary below). In AEI’s view, studies like this (and indeed, reviews such as those considered here) are diligent exercises in quantifying an issue that has become, for all practical purposes, a matter of divergent world views and beliefs talking at and past each other.

This post includes more analysis and interpretation by AEI than we generally include in our science summaries; it’s a long read, but the issues that triggered this study are important ones. Though the clear-cut results reported here are difficult to take at face value, it is well worth considering the underlying forces that drive tensions between environmental groups and Navy/industry activities at sea. While primary research and even literature reviews funded by the military don’t appear overly biased toward finding no effect (in both cases, they include far more results showing effects than not), the fact remains that, in practical terms, the environmental impact statements (EISs) prepared by the Navy and the mitigation measures imposed by regulators on both military and oil and gas activities are largely grounded in the belief (and regulatory determination) that any effects of these activities are “negligible,” to use the formal term. Thus the conservation community’s focus on funding research and publishing overviews that emphasize credible studies documenting negative effects is understandable.

Of special note is that the authors did not find any strong trend toward bias in results reported by independent, academic researchers receiving Navy funding: these studies showed a proportion of effect and no-effect results similar to that of studies funded by neither the military nor conservation groups (though when military-funded studies were compared with all the others, including conservation-funded ones, a statistically non-significant trend of 1.64 times more “no effect” findings was observed). This should defuse widespread concerns that cash-strapped academic researchers are “cooking the books” or avoiding publishing negative findings in order to retain Navy funding (though it is perhaps unsurprising that few if any key Navy-funded scientists are among the researchers willing to speak out publicly for stronger regulation of ocean noise). The authors conclude that “much of the bias in military-funded research was in work carried out at military institutions, rather than in studies funded by the military but carried out at universities and other institutions.” Thus, research coming directly out of military offices is likely to remain less reliable as a representation of “the whole picture,” as may research entirely funded by conservation groups. Still, by integrating and considering the full range of studies reported in all of these reviews, the public can get a pretty decent picture of the current state of our understanding of the effects of ocean noise.

Of note, though, is that the proportion of “no effect” to “effect” findings is slightly lower in military-funded studies. In addition, military-funded studies are three times as likely to report BOTH effects and lack of effects in a single paper; this could indicate either a more careful assessment of the margins where effects are just noticeable, or a tendency to split the difference in order to either underplay the effects or accentuate the non-effects to assuage funders.

While ocean noise issues came to public awareness after a series of stranding deaths and lawsuits, the fact is that deaths and injuries caused by noise are very rare. Even the leading environmental activists have shifted their focus, and today nearly all of the controversy over military and oil and gas noise boils down to differing interpretations of how important moderate behavioral changes are, and whether they should be avoided or not. And science is nearly incapable of shedding any definitive light on how important behavioral changes are, thus leaving the two sides largely reliant on their divergent faith: the Navy and oil industry’s faith that the behavioral changes are transient and negligible, and environmentalists’ faith that chronic behavioral disruption by human noise is bound to have negative consequences. Meanwhile, ethical questions about humanity’s relationship to the natural world are outside the bounds of discussion on one side, and central to the whole discussion on the other. This is not as black and white a picture as either side may paint, but it’s where we are.

For AEI’s full summary and discussion of this important new study, dive in below the fold…

In the United States, the US Navy funds about 70% of the research into the effects of ocean noise on wildlife. For many years, conservation groups have questioned whether this preponderance of funding is skewing research results, either by constraining the types of questions being studied or by leading researchers to downplay negative impacts of noise in order to keep receiving funding. This paper investigates the latter possibility by comparing five recent reviews of the effects of ocean noise on wildlife: one funded by a leading environmental group, three that received substantial Navy funding, and two funded by organizations with no particular stake in the debate. The researchers counted the citations in each review that showed an effect of noise, showed no effect, or showed both an effect and a lack of effect. They also attempted to identify the funding sources of the primary papers cited in each of these secondary reports, and to correlate funding source with the same three outcomes (noise shown to have an effect, noise causing no effect, or aspects of the primary research showing both effects and no effect).

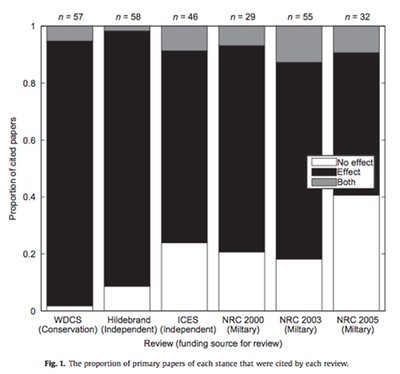

Using both simple citation counts and G-tests of the null hypothesis that funding source and outcome are unrelated, the authors report a significant correlation between funding source and the proportion of citations reporting “no effect” of noise. The five review papers certainly show a trend, though it appears to AEI that each of the three papers anchoring that trend may have been shaped by its original purpose.
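For readers curious about the statistics: a G-test of independence compares the observed counts in a funding-source-by-outcome table with the counts expected if funding and outcome were unrelated, using G = 2 Σ O ln(O/E), where each cell’s expected count E is (row total × column total) / N, and G is compared against a chi-square distribution with (rows - 1)(columns - 1) degrees of freedom. Below is a minimal Python sketch of that textbook definition. It is not the authors’ analysis: their exact procedure (for example, whether they applied a Williams correction) isn’t described here. The helper names `g_test` and `chi2_sf` are hypothetical, and the counts in the example table are made up purely for illustration; they are not the data from Wade et al.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """P(X > x) for a chi-square variable with df degrees of freedom.

    Uses the series expansion of the regularized lower incomplete gamma
    function P(df/2, x/2); adequate for the small tables sketched here.
    """
    if x <= 0:
        return 1.0
    s, z = df / 2.0, x / 2.0
    term = 1.0 / s
    total = term
    n = 1
    while term > total * 1e-15:  # add series terms until they stop mattering
        term *= z / (s + n)
        total += term
        n += 1
    lower = math.exp(s * math.log(z) - z - math.lgamma(s)) * total
    return max(0.0, 1.0 - lower)


def g_test(table: list[list[int]]) -> tuple[float, int, float]:
    """G-test of independence for a funding-source x outcome count table.

    Returns (G statistic, degrees of freedom, p-value).
    No Williams or Yates correction is applied (an assumption of this sketch).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    g = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            if observed > 0:  # empty cells contribute 0 (0 * ln 0 is taken as 0)
                expected = row_totals[i] * col_totals[j] / n
                g += observed * math.log(observed / expected)
    g *= 2.0
    df = (len(table) - 1) * (len(table[0]) - 1)
    return g, df, chi2_sf(g, df)


if __name__ == "__main__":
    # HYPOTHETICAL counts for illustration only (NOT the data from Wade et al.).
    # Rows: conservation, neither, military funding.
    # Columns: effect, no effect, both.
    table = [
        [20, 2, 3],
        [40, 10, 10],
        [30, 15, 10],
    ]
    g, df, p = g_test(table)
    print(f"G = {g:.2f}, df = {df}, p = {p:.3f}")
```

With real counts, a small p-value would indicate that the mix of effect, no-effect, and mixed findings differs between funding sources more than chance alone would readily explain; on its own it says nothing about why.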

Reviews: Proportion of citations of each stance

The authors recognize that the one conservation-funded review (“Oceans of Noise,” WDCS) drew almost exclusively on studies that did show an effect of noise, and they re-analyzed the trend with that review excluded; a significant effect remained, though it was half as strong. However, one of the “independent” papers, a chapter in a book on the ocean environment (Hildebrand), was perhaps similarly constrained by its purpose: titled “Impacts of Anthropogenic Noise,” it was not necessarily intended as a comprehensive assessment of what does and does not cause an effect. At the other end of the spectrum, the only Navy-funded survey that diverged notably from the other independent paper (from ICES) was the third National Research Council (NRC) report, which addressed the difficulty of determining when repeated behavioral disruptions of a portion of a population accumulate to the point of becoming “biologically significant.” This survey could therefore be expected to include more papers exploring the edges of impacts, so it is not surprising that it cites more studies showing no effect. Given the underlying purposes of the two reports with the fewest “no effect” citations and the one with the most, the five papers being compared do not appear to be on quite the same playing field. The other three papers (ICES and the first two NRC reports on noise and marine mammals) showed no marked differences in their balance of citations, with the Navy-funded reports actually having a lower proportion of “no effect” papers and a higher proportion of “effect” papers than the ICES survey.

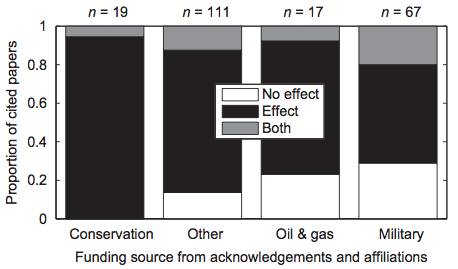

Primary papers: funding source and results reported

Turning to the primary papers, the picture is not muddied by the differing purposes of the reviews; in addition, roughly half the papers analyzed were not funded by any of the apparent interest groups, making for a more robust “center” against which to compare. Here, there is a clearer trend toward more “no effect” conclusions as funding moves from conservation to no-agenda to industry and military sources. Again, the 19 conservation-funded papers nearly all reported an effect, with just one showing both an effect and no effect for separate questions under investigation. The 67 military-funded papers, by contrast, were far more likely (2.3 times as likely) to reach a no-effect conclusion than papers funded by other (non-conservation, non-industry) sources. However, and significantly, fewer than 30% of military-funded papers reached that conclusion, while about 50% reported an effect of noise and 20% showed both effects and a lack of effects. Likewise, in the first two NRC reviews, about 20% of citations showed “no effect,” while over 60% showed an effect; even the most “extreme” review had more citations showing an effect than not.
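To make the “far more likely, yet still a minority” point concrete, here is a small arithmetic sketch in Python. It assumes that “2.3 times as likely” is a ratio of proportions (a risk ratio); the summary does not say whether the authors reported a risk ratio or an odds ratio, so treat that reading as an assumption. Under it, a military-funded “no effect” share below 30% implies that other funders’ “no effect” share was below roughly 13%. The helper names are hypothetical, and the only inputs are the two figures quoted above.

```python
def relative_likelihood(p_group: float, p_reference: float) -> float:
    """Ratio of proportions: how many times as likely an outcome is in one
    funding group as in a reference group (ASSUMED risk-ratio reading)."""
    if p_reference <= 0:
        raise ValueError("reference proportion must be positive")
    return p_group / p_reference


def implied_reference_share(p_group: float, ratio: float) -> float:
    """Back out the reference group's share from a group's share and a ratio."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return p_group / ratio


# Figures quoted in this summary: under 30% of military-funded papers reported
# "no effect", described as 2.3 times the rate among other (non-conservation,
# non-industry) funders. Because 30% is an upper bound, so is the result.
ceiling = implied_reference_share(0.30, 2.3)
print(f"Other funders' 'no effect' share: below about {ceiling:.0%}")
# -> Other funders' 'no effect' share: below about 13%
```

The point of the exercise is that a large ratio and a modest absolute share are entirely compatible: doubling a small proportion still leaves it small.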

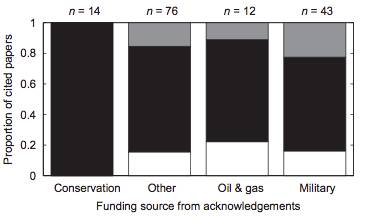

Of special note is that the authors did not find any strong trend toward bias in results reported by independent, academic researchers receiving Navy funding: these studies showed a proportion of effect and no-effect results similar to that of studies funded by neither the military nor conservation groups (though when military-funded studies were compared with all the others, including conservation-funded ones, a statistically non-significant trend of 1.64 times more “no effect” findings was observed).

Here, “military” funding represents studies conducted by non-military researchers with military funding.

As the authors stress in considering the possible bias of conservation-funded primary studies, conflicts of interest would be dangerous if scientists “orient(ed) their goals, methods, analysis or interpretation towards the perceived interests of the….community that funded them.” The authors conclude that “much of the bias in military-funded research was in work carried out at military institutions, rather than in studies funded by the military but carried out at universities and other institutions.” This suggests that the bias attributable to funding alone is negligible, which should defuse concerns that cash-strapped academic researchers are “cooking the books” to retain Navy funding. Research coming directly out of military offices is likely to remain less reliable as a representation of “the whole picture,” as may research entirely funded by conservation groups. Still, by integrating and considering the full range of studies reported in all of these reviews, the public can get a pretty decent picture of the current state of our understanding of the effects of ocean noise.

Of note, though, is that the proportion of “no effect” to “effect” findings is slightly lower in military-funded studies. In addition, military-funded studies are three times as likely to report BOTH effects and lack of effects in a single paper (again, when compared to all others, including conservation-funded, which rarely showed both); this could indicate either a more careful assessment of the margins where effects are just noticeable, or a tendency to split the difference in order to either underplay the effects or accentuate the non-effects to assuage military funders.

Overall, at both layers of analysis, it seems clear to AEI that the conservation-funded papers and survey report showed the most obvious “bias.” However, this is not necessarily problematic (unless it is used as a baseline to suggest bias in others). As the authors note, “conservation groups do not fund research unless they have previously identified a potentially damaging effect,” and because conservation groups’ focus on precautionary approaches and their mandate to “publicize activities that are potentially damaging to the environment” are quite transparent, this funding pattern “should not be problematic unless threats are ‘hyped’ where there are none.” (Note: while non-existent threats are rarely hyped by conservation groups, a more difficult question arises when moderate or minor threats are presented to the public as more dramatic than they may be.) The authors note that while these results could suggest that conservation funding is problematic, “the argument can be made that their role as a preventative authority is necessary.”

Indeed, while primary research and even survey reviews funded by the military are evidently not overly biased toward finding no effect (in both cases, they include far more results showing effects than not), it also appears to AEI that, in practical terms, the EISs prepared by the Navy and the mitigation measures imposed by regulators on both military and oil and gas activities are largely grounded in the belief (and regulatory determination) that any effects of these activities are “negligible,” to use the formal term. Thus the conservation community’s focus on funding research and publishing overviews that emphasize credible studies documenting negative effects is understandable, given these groups’ role in raising public awareness and counterbalancing the military’s and industry’s singular interpretation of more nuanced research. It might be more fruitful to explore how the “balance” of Navy-funded studies and reviews serves as a fig leaf for actions that nearly always presume no harm. Another key question not yet examined with the rigor brought to this study is whether Navy-funded research is oriented toward studies that, by the questions being asked, are more likely to produce “no effect” findings, just as the investigations that conservationists fund apparently tend to ask questions likely to show an effect. Similarly, the questions being asked can color the perceived importance of changing how we use sound in the sea. For example, one study might seek to identify “recoverable thresholds” of exposure (the maximum sound an animal can experience that causes a temporary hearing shift, with hearing returning to normal after a few minutes or hours), while another may look for “behavioral thresholds” (the sound exposure that triggers behavioral changes).
Implicit in the first question is the idea that as long as an effect is not permanent, it is acceptable; conversely, the second question implies a desire to minimize disturbance of animals. Indeed, the first question seeks the maximum sound level we can feel comfortable imposing, while the second asks for the minimum sound level that might affect the animal.

More to the point, though, many or most of the studies that do show effects are somewhat ambiguous (e.g., only a proportion of the population shows the effect, or the practical import of the change is difficult or impossible to determine), while a finding of “no effect” is more clear-cut. It is not outlandish on the face of it for the Navy to say, as it does, that its actions are not likely to cause any major disruption of animal life: the only clear-cut evidence we have is that extremely loud sounds at very close range (tens of meters) can injure animals, while the rest of the research really is shades of grey. Nearly all of the controversy over military and oil and gas noise today boils down to differing interpretations of how important moderate behavioral changes are, and whether they should be avoided. And science is nearly incapable of shedding any definitive light on how important behavioral changes are, leaving the two sides largely reliant on their divergent faiths: the Navy and oil industry’s faith that the behavioral changes are transient and negligible, and environmentalists’ faith that chronic behavioral disruption by human noise is bound to have negative consequences. Meanwhile, ethical questions about humanity’s relationship to the natural world are outside the bounds of discussion on one side, and central to the whole discussion on the other. In the end, studies like this (and indeed, reviews such as those considered here) are largely diligent exercises in quantifying an issue that has become, for all practical purposes, a matter of divergent world views talking at and past each other.